Auditing content on Wikipedia is a critical process to ensure the reliability, accuracy, and neutrality of the information presented. Here's a breakdown of how Wikipedia auditing works:

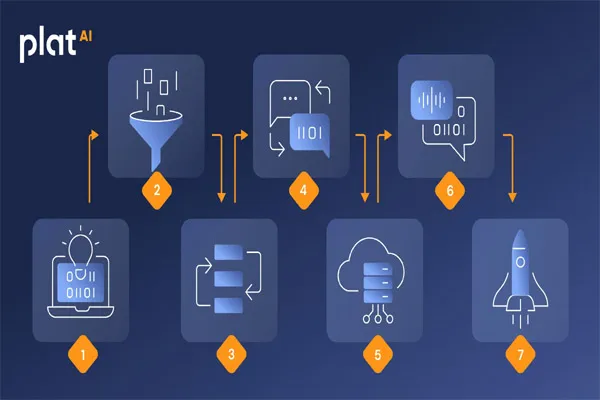

1. Monitoring and Review Process

- Community-Driven Monitoring: Wikipedia relies on a community of volunteer editors who constantly monitor edits and updates made to articles. This community is instrumental in spotting errors, biases, or vandalism.

- Recent Changes Feed: Wikipedia maintains a "Recent Changes" page, where editors can quickly review any new edits made across the platform. This allows for near-real-time auditing of content.

- Watchlists: Logged-in users can add articles to their "watchlist," a personal feed that lists recent changes to the pages they follow (email notifications are optional). This enables targeted tracking of content that may require closer scrutiny.
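
The monitoring described above can also be done programmatically. The following is a minimal sketch, assuming the MediaWiki Action API's `list=recentchanges` query on English Wikipedia; the function names `recent_changes_url` and `summarize_changes` are hypothetical helpers written for this illustration, and no network request is made here.

```python
from urllib.parse import urlencode

# English Wikipedia's Action API endpoint.
API_URL = "https://en.wikipedia.org/w/api.php"


def recent_changes_url(limit=10):
    """Build a query URL for the most recent edits and page creations.

    Hypothetical helper: it only assembles the URL and does not fetch it.
    """
    params = {
        "action": "query",
        "list": "recentchanges",
        "rcprop": "title|ids|user|timestamp|comment|sizes",
        "rctype": "edit|new",
        "rclimit": limit,
        "format": "json",
    }
    return f"{API_URL}?{urlencode(params)}"


def summarize_changes(response):
    """Reduce a decoded API response to (title, user, size change) tuples."""
    changes = response.get("query", {}).get("recentchanges", [])
    return [
        (c["title"], c.get("user", "(hidden)"), c.get("newlen", 0) - c.get("oldlen", 0))
        for c in changes
    ]
```

A large negative size change in the summary is one signal a reviewer might use to decide which edits to open first.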

2. Content Quality Assessments

- Wikipedia’s Content Rating System: Articles are graded on a quality scale whose classes include "Stub," "Start," "C," "B," "Good Article," and "Featured Article." These ratings, usually recorded on the article's talk page, help editors focus on the articles that most need improvement.

- Automatic and Manual Reverts: Edits identified as inaccurate or as vandalism can be reverted by bots or human editors, restoring an earlier version of the page that complied with Wikipedia’s guidelines.
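
Because a revert restores an earlier version of the page, it can often be spotted in a page's history by looking for a revision whose content hash matches an older revision. The sketch below illustrates this "identity revert" idea under the assumption that each revision is a dictionary with `revid` and `sha1` keys, listed oldest first (the revisions API can return a `sha1` value when asked via `rvprop`). The function name `find_identity_reverts` is hypothetical, and this is not how Wikipedia itself labels reverts.

```python
def find_identity_reverts(revisions):
    """Return (reverting_revid, restored_revid) pairs.

    `revisions` is assumed to be ordered oldest first, each item a dict
    with 'revid' and 'sha1' keys. A revision counts as a revert when its
    content hash equals that of an earlier, non-adjacent revision.
    """
    last_seen = {}   # sha1 -> most recent revid that had this content
    reverts = []
    prev_sha1 = None
    for rev in revisions:
        sha1 = rev["sha1"]
        # Skip consecutive duplicates (null edits change nothing).
        if sha1 in last_seen and sha1 != prev_sha1:
            reverts.append((rev["revid"], last_seen[sha1]))
        last_seen[sha1] = rev["revid"]
        prev_sha1 = sha1
    return reverts
```

For example, in a history whose hashes run `a, b, a`, the third revision is reported as restoring the first. Partial reverts, which undo only some of the intervening changes, are not detected by this approach.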

3. Neutrality and Source Validation

- Neutral Point of View (NPOV): Articles on Wikipedia are expected to maintain a neutral point of view. Editors are encouraged to audit content to ensure it’s free of bias, promotional language, or personal opinions.

- Citations and Sources: Wikipedia's verifiability policy requires that content be attributable to reliable, published sources, and that quotations and any material that is challenged or likely to be challenged carry an inline citation. Auditors check whether references are valid, relevant, and up-to-date. If the sources are questionable or non-existent, the claim may be removed or tagged for further verification.
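
A first pass over an article's sourcing can be automated before a human reads the references. The following is a rough sketch, assuming the article is available as raw wikitext in which citations appear as `<ref>` tags and unsourced claims are marked with the `{{citation needed}}` template or its `{{cn}}` shorthand. The function name `citation_report` and the paragraph-splitting rule are assumptions made for this example, and the output says nothing about whether a cited source is actually reliable.

```python
import re

# Opening <ref> tags, including named and self-closing forms.
# Does not match </ref> or <references />.
REF_TAG = re.compile(r"<ref[\s>/]", re.IGNORECASE)

# The {{citation needed}} template and its {{cn}} shorthand.
CITATION_NEEDED = re.compile(r"\{\{\s*(?:citation needed|cn)\b", re.IGNORECASE)


def citation_report(wikitext):
    """Count citations and flag paragraphs that contain none."""
    paragraphs = [p for p in wikitext.split("\n\n") if p.strip()]
    unreferenced = [p for p in paragraphs if not REF_TAG.search(p)]
    return {
        "paragraphs": len(paragraphs),
        "ref_tags": len(REF_TAG.findall(wikitext)),
        "citation_needed_tags": len(CITATION_NEEDED.findall(wikitext)),
        "unreferenced_paragraphs": len(unreferenced),
    }
```

A high count of unreferenced paragraphs is only a prompt for closer reading: lead sections and plot summaries, for instance, are often left without inline citations by convention.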

4. Vandalism Detection and Prevention

- Bots: Wikipedia uses bots that automatically detect and revert common forms of vandalism, such as inserting offensive language or completely irrelevant information.

- Human Auditing: In cases where bots can't fully handle the complexities of changes, human auditors examine the content and remove any harmful alterations.
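
To make the division of labor between bots and humans concrete, here is a deliberately simple, rule-based sketch of how an edit might be scored for review. It is a toy heuristic written for this article, not the method used by any real Wikipedia bot; production tools are considerably more sophisticated. The function name `vandalism_score`, the thresholds, and the two-word blocklist are all assumptions.

```python
def vandalism_score(old_text, new_text, blocklist=("stupid", "idiot")):
    """Return a rough suspicion score for an edit (higher = more suspect).

    Toy rules, chosen only for illustration:
      +2 if the edit removes more than half of the text
      +2 if it adds a word from the blocklist
      +1 if more than 70% of the letters in the new text are uppercase
    """
    score = 0

    # Rule 1: large removals (blanking).
    if old_text and len(new_text) < 0.5 * len(old_text):
        score += 2

    # Rule 2: newly introduced blocklisted words.
    added_words = set(new_text.lower().split()) - set(old_text.lower().split())
    if any(word.strip(".,!?") in blocklist for word in added_words):
        score += 2

    # Rule 3: shouting.
    letters = [ch for ch in new_text if ch.isalpha()]
    if letters and sum(ch.isupper() for ch in letters) / len(letters) > 0.7:
        score += 1

    return score
```

In a workflow like the one described above, high-scoring edits could be reverted automatically while borderline scores are queued for a human reviewer, who can weigh context that simple rules miss.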

5. Dispute Resolution

- Talk Pages: Editors may use talk pages associated with each article to discuss contentious edits or disputes over the accuracy of information. These discussions help determine consensus on controversial topics.

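- Wikipedia Arbitration Committee: For serious disputes that can't be resolved through discussion or other community processes, the English Wikipedia has an Arbitration Committee that issues binding decisions. The committee rules primarily on editor conduct rather than on article content; content questions are settled by community consensus, for example through requests for comment.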

6. Auditing Tools

- Page History: Each Wikipedia page has a "View history" tab that lists every saved revision along with its author, timestamp, and edit summary. Auditors use it to track changes over time and to identify the edit that introduced a problem.

- Diff Tools: Wikipedia has diff tools that allow users to compare different versions of an article side-by-side to see what was changed and when.
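
The same kind of comparison can be reproduced offline once two revisions' text is in hand. The sketch below uses Python's standard `difflib` module to produce a unified diff; it approximates what a diff view shows and is not Wikipedia's own diff engine (the MediaWiki API also offers an `action=compare` module for server-side diffs). The function name `revision_diff` and the labels are hypothetical.

```python
import difflib


def revision_diff(old_text, new_text, old_label="previous", new_label="current"):
    """Return a unified diff between two revisions as a list of lines."""
    return list(
        difflib.unified_diff(
            old_text.splitlines(),
            new_text.splitlines(),
            fromfile=old_label,
            tofile=new_label,
            lineterm="",  # inputs have no trailing newlines, so add none
        )
    )


if __name__ == "__main__":
    before = "Line one\nLine two"
    after = "Line one\nLine 2"
    print("\n".join(revision_diff(before, after)))
```

Lines prefixed with `-` were removed and lines prefixed with `+` were added, which is usually enough to see at a glance what an edit changed.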

7. Ensuring Compliance with Wikipedia’s Guidelines

- Wikipedia’s Manual of Style: Auditors ensure content adheres to the platform’s established guidelines, including proper formatting, grammar, and style.

- Copyright Compliance: Wikipedia's text is published under a free license (Creative Commons Attribution-ShareAlike), so contributions must be freely licensed and properly attributed. Auditors check that articles do not copy copyrighted material and that any external content is compatibly licensed.