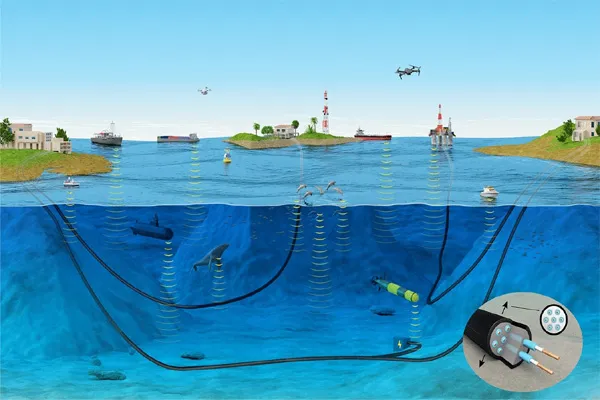

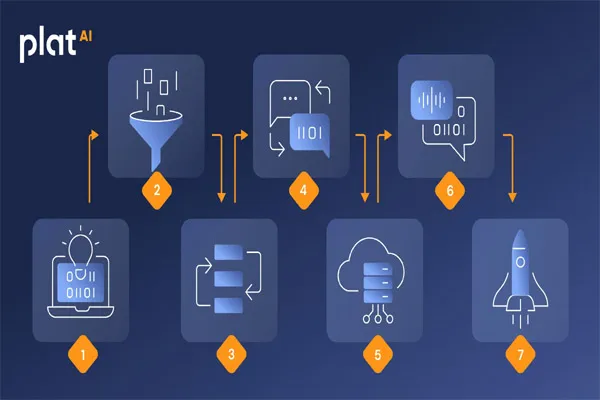

Synthesis Steps:

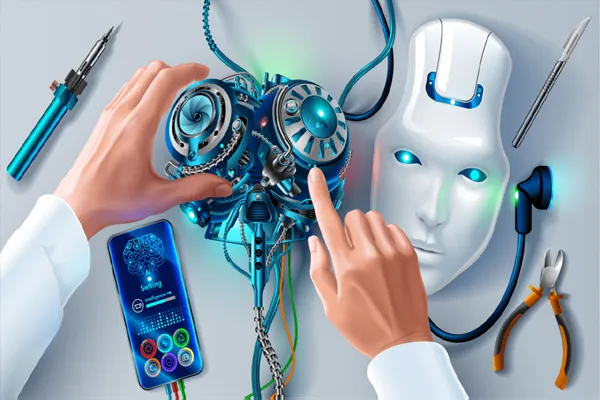

1. Text Analysis: The model processes the input prompt to identify the objects, attributes, and relationships it describes.

2. Tokenization: The text is split into tokens, typically words or subword units.

3. Embeddings: Each token is converted into a numerical vector (embedding) that captures its semantic meaning.

4. Image Generation: The embeddings condition a generative model (e.g., a GAN, VAE, or diffusion model), which produces the image.
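
The tokenization and embedding steps above can be sketched in a few lines. This is a toy illustration, not how any production model works: `tokenize`, `embed`, and `encode_prompt` are hypothetical helper names, the tokenizer splits on whitespace instead of using subword units, and the "embedding" is a hash-derived vector, not a learned one, so it carries no semantic meaning.

```python
import hashlib

def tokenize(text):
    """Lowercase the prompt and split on whitespace (real systems use subword tokenizers)."""
    return text.lower().split()

def embed(token, dim=4):
    """Map a token to a deterministic pseudo-embedding with values in [0, 1).

    Assumption: a hash stands in for a learned embedding table.
    """
    digest = hashlib.sha256(token.encode("utf-8")).digest()
    return [digest[i] / 256 for i in range(dim)]

def encode_prompt(text, dim=4):
    """Run tokenization, then embed each token; returns (tokens, vectors)."""
    tokens = tokenize(text)
    return tokens, [embed(t, dim) for t in tokens]

tokens, vectors = encode_prompt("A red fox in snow")
print(tokens)        # one entry per whitespace-separated word
print(len(vectors))  # one vector per token
```

In a real pipeline, the list of vectors would be passed to the generative model as conditioning input.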

Key AI Architectures:

1. Generative Adversarial Networks (GANs): Consist of two neural networks trained against each other: a generator, which creates images, and a discriminator, which judges how realistic those images are.

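2. Variational Autoencoders (VAEs): Learn to compress images into a compact latent representation and reconstruct them from it. In text-to-image systems, generation can be conditioned on text embeddings.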

3. Transformers: Used for text analysis and embedding generation.
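
The adversarial setup in a GAN can be made concrete through its loss functions. The sketch below assumes the standard binary cross-entropy formulation with the commonly used non-saturating generator loss. The function names are illustrative, and `d_real` and `d_fake` stand for the discriminator's probability outputs on a real and a generated image.

```python
import math

def discriminator_loss(d_real, d_fake):
    """Low when the discriminator scores real images near 1 and fakes near 0."""
    return -(math.log(d_real) + math.log(1.0 - d_fake))

def generator_loss(d_fake):
    """Low when the discriminator is fooled, i.e. scores fakes near 1."""
    return -math.log(d_fake)

# A confident, correct discriminator has a lower loss than an undecided one.
print(discriminator_loss(0.9, 0.1) < discriminator_loss(0.5, 0.5))
# The generator's loss falls as its images become more convincing.
print(generator_loss(0.9) < generator_loss(0.1))
```

During training the two losses are minimized in alternation, which is what pushes the generator toward more realistic images.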

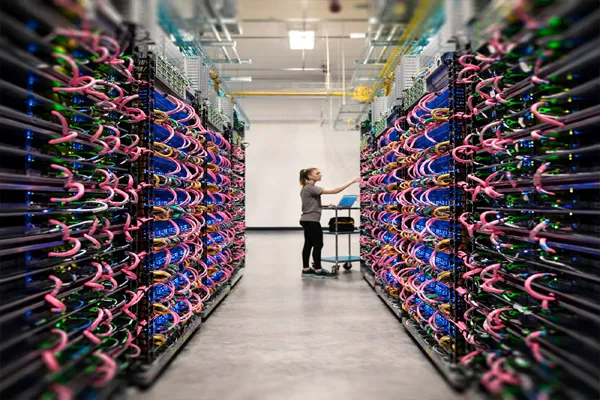

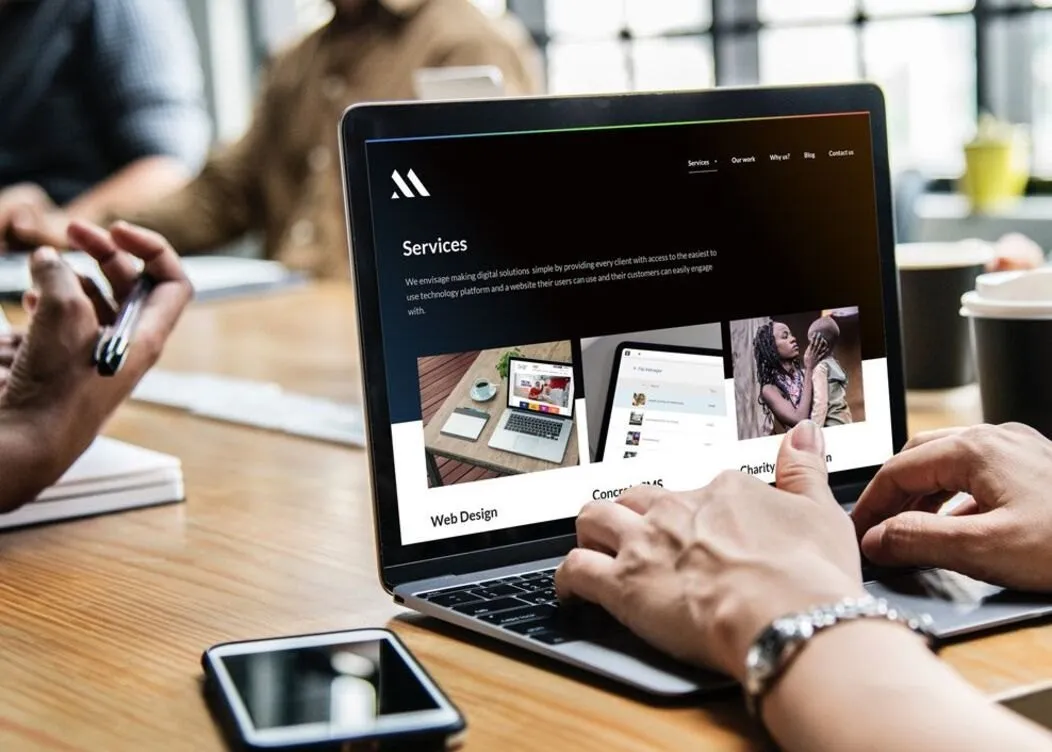

Popular Text-to-Image Models:

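1. DALL-E: OpenAI's model family; generates images from text prompts, including photorealistic ones.

2. Midjourney: Creates images from text descriptions and is known for stylized, artistic output.

3. Stable Diffusion: An openly released latent diffusion model that produces high-quality images from text prompts.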

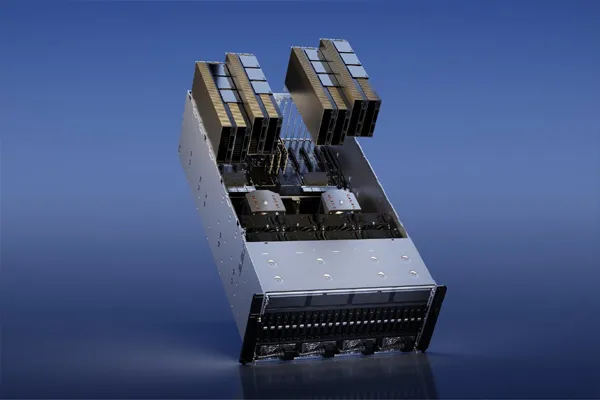

Techniques Used:

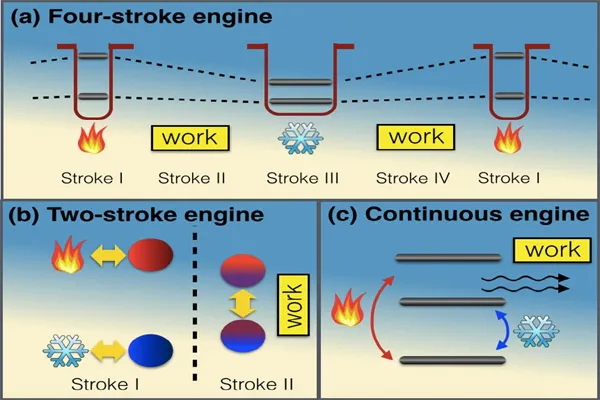

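1. Diffusion Models: Start from random noise and remove it step by step, guided by the text embeddings, until an image emerges.

2. Attention Mechanisms: Let the model weight specific text tokens more heavily when generating each part of the image.

3. Layer Normalization: Normalizes the activations within each layer, which stabilizes training and generation.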
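
The three techniques above can each be written as a short function. The following is a minimal sketch in plain Python, assuming single vectors instead of batched tensors. `attention` is scaled dot-product attention for one query, `layer_norm` omits the learned scale and shift parameters, and `add_noise` shows only the forward (noising) half of a diffusion process, with `alpha_bar` as the assumed fraction of signal kept at a given timestep. All function names are illustrative.

```python
import math
import random

def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(query, keys, values):
    """Scaled dot-product attention for a single query vector."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return output, weights

def layer_norm(x, eps=1e-5):
    """Normalize a vector to zero mean and (approximately) unit variance."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]

def add_noise(x0, alpha_bar, rng):
    """Forward diffusion: x_t = sqrt(alpha_bar) * x0 + sqrt(1 - alpha_bar) * noise."""
    noise = [rng.gauss(0.0, 1.0) for _ in x0]
    xt = [math.sqrt(alpha_bar) * a + math.sqrt(1.0 - alpha_bar) * n
          for a, n in zip(x0, noise)]
    return xt, noise

# Usage: an image query attends over two text-token embeddings.
out, weights = attention([1.0, 0.0], [[1.0, 0.0], [0.0, 1.0]], [[1.0, 0.0], [0.0, 1.0]])
print(weights)  # the first token, which matches the query, gets the larger weight
```

A diffusion model is trained to predict the `noise` term from `xt`, and generation runs that prediction in reverse, starting from pure noise.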

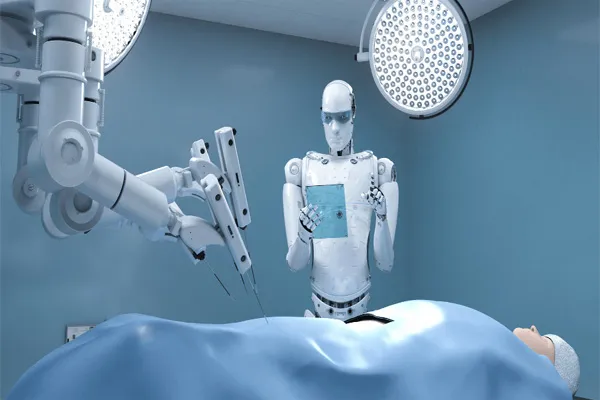

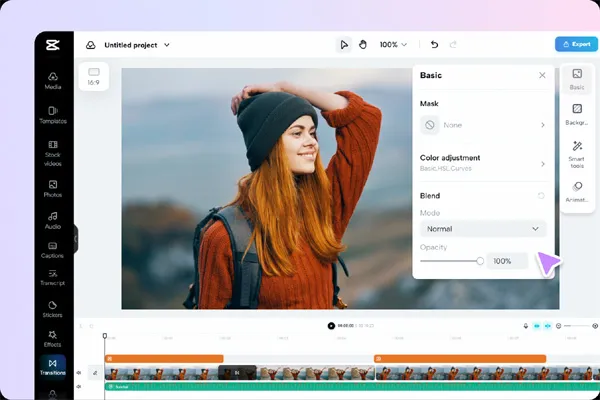

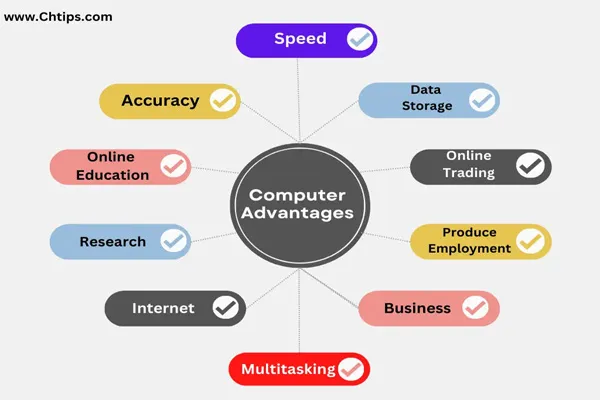

Applications:

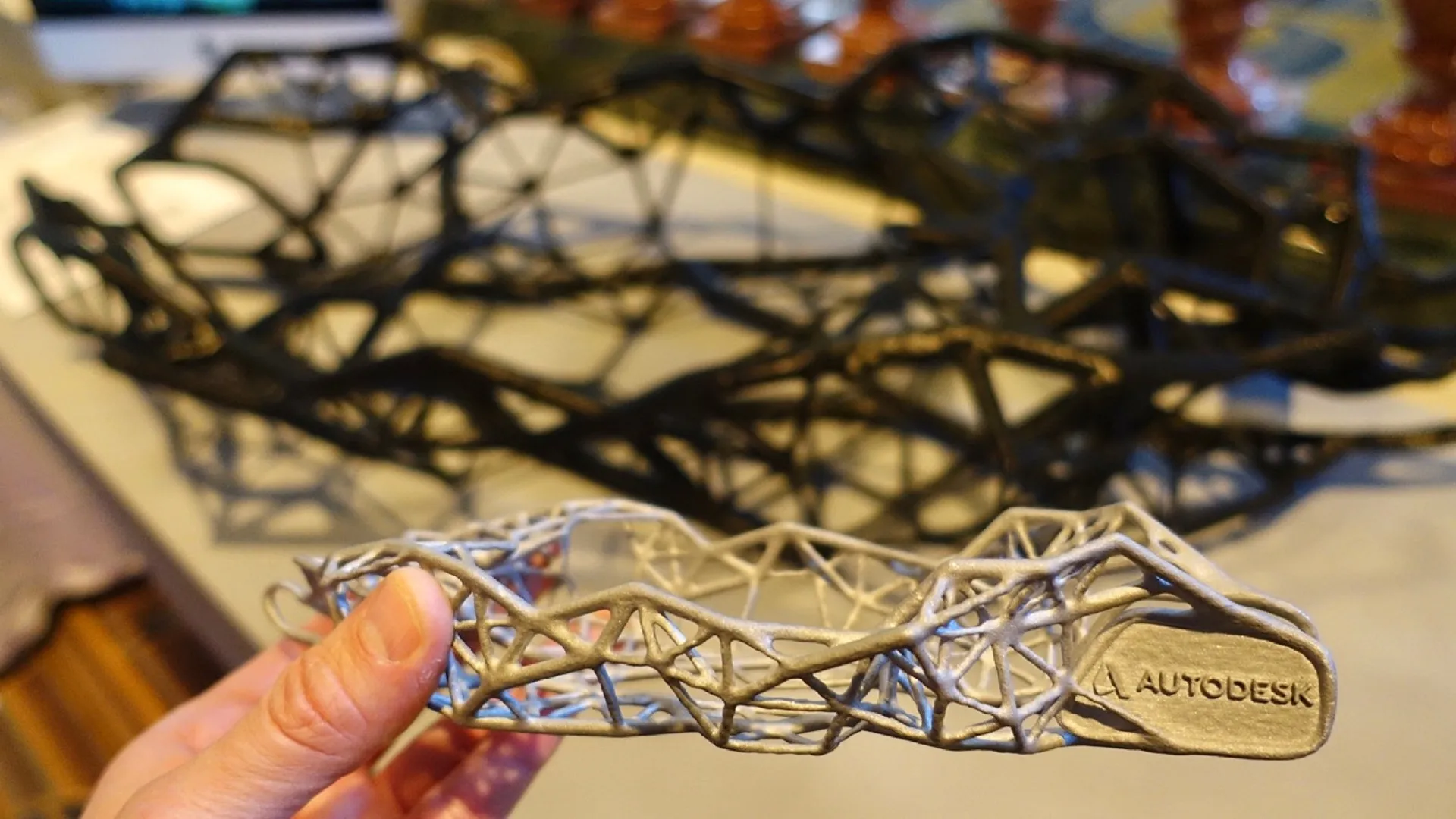

1. Art and Design: Generate artwork, product designs, or architectural visualizations.

2. Advertising: Create customized ads with dynamic images.

3. Virtual Reality: Generate immersive environments.

4. Education: Visualize complex concepts for better understanding.