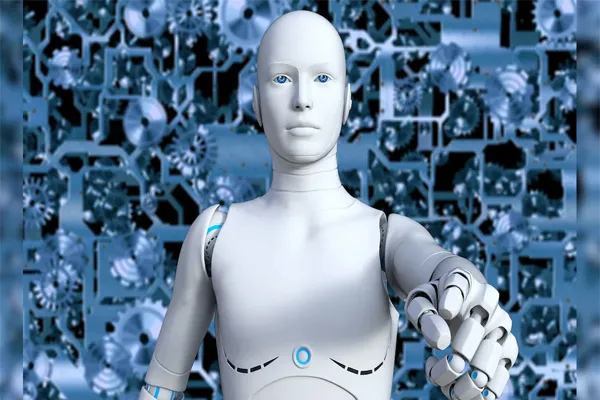

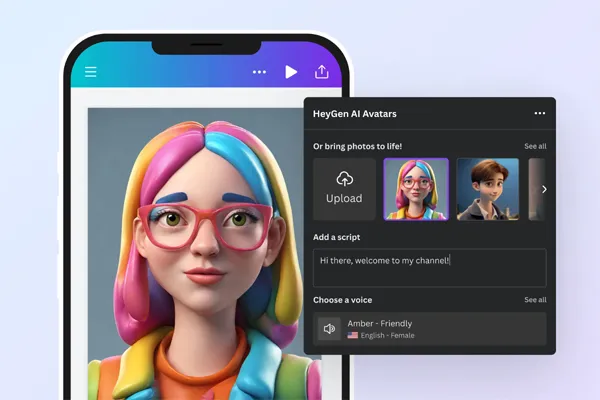

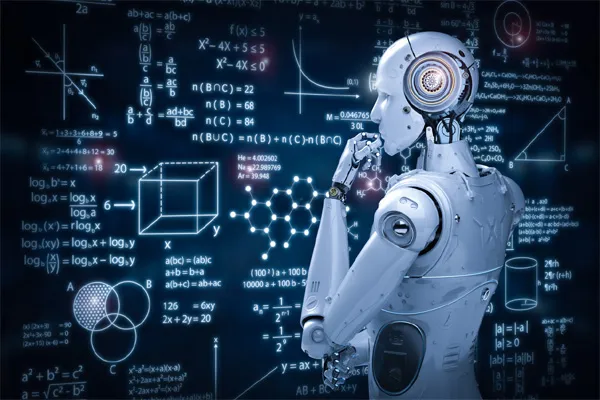

Creating trustworthy AI-generated video content involves addressing ethical concerns, ensuring transparency, and maintaining high-quality output that aligns with user expectations. This article draws on general knowledge and publicly available web sources, with a focus on reliability, ethics, and practical considerations.

AI videos are created with artificial intelligence tools that generate or enhance video content from text prompts, images, or audio inputs. These tools, like Synthesia, HeyGen, and Google’s Veo 3, can produce everything from realistic avatars to animated scenes in minutes. As AI videos become more lifelike, concerns about misinformation, deepfakes, and ethical implications grow. Determining their trustworthiness is therefore crucial for creators, businesses, and viewers.

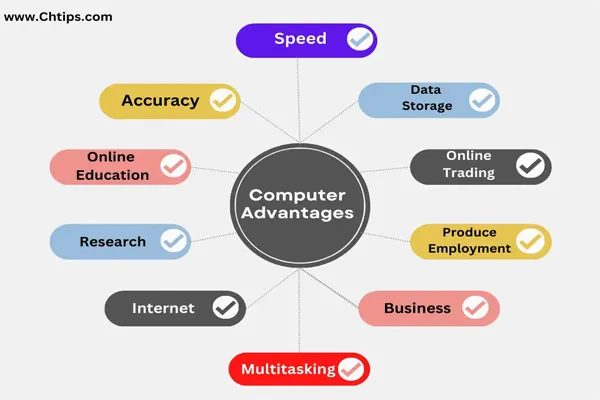

Factors That Influence the Trustworthiness of AI Videos

- Transparency and Disclosure

- Watermarking and Labeling: Reputable platforms like Google’s Veo 3 embed watermarks (e.g., SynthID, an imperceptible digital watermark) that mark content as AI-generated, so that detection tools and, in turn, viewers can distinguish synthetic media from real footage.

- Ethical Platforms: Tools like HeyGen prioritize user trust by aligning with security, privacy, and AI regulatory frameworks (e.g., SOC 2, GDPR, the EU AI Act), which supports responsible content generation.

- Challenge: Some platforms or creators may not disclose AI usage, increasing the risk of deceptive content, such as scams or fake endorsements.

- Quality and Realism

- High-Quality Output: Platforms like Synthesia and HeyGen produce studio-quality videos with realistic avatars and voiceovers, making them well suited to professional use (e.g., training, marketing). Users report consistent, high-resolution outputs (720p to 4K) with minimal artifacts.

- Limitations: Some tools, like OpenAI’s Sora, may produce inconsistent results or artifacts (e.g., out-of-sync narration, unnatural motion), which can undermine trust in certain applications.

- Example: A user noted that Veo 3 delivers cinematic quality with accurate motion and audio, but audio syncing issues persist in some cases, affecting perceived reliability.

- Ethical Considerations

- Misinformation Risks: AI videos can mimic real events or people, raising concerns about deepfakes or manipulated media. For instance, AI-generated videos of disputed events (e.g., riots) can mislead viewers if not clearly marked.

- Safety Measures: Platforms like Canva and deevid.ai implement automated reviews to block inappropriate content, which reduces the risk of harmful outputs.

- Responsible Use: Ethical platforms encourage creators to use AI videos for legitimate purposes, like education or marketing, rather than deceptive practices.

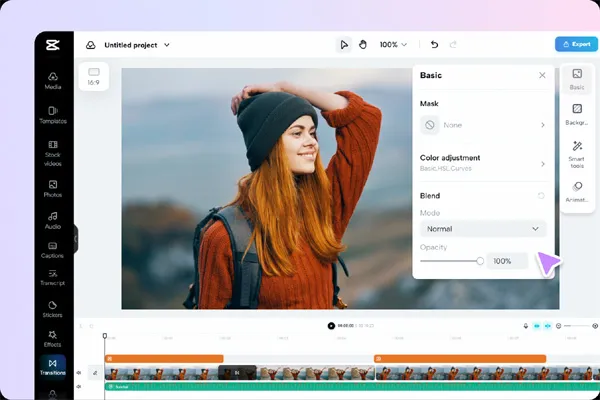

- User Control and Customization

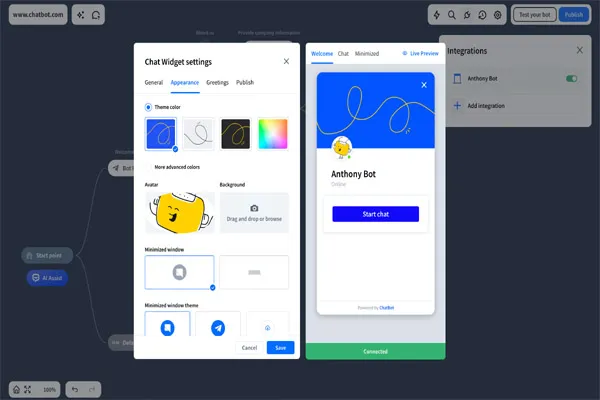

- Creative Control: Tools like Steve AI and Renderforest allow users to fine-tune scripts, visuals, and voiceovers, ensuring the final product aligns with their intent and brand. This control builds trust by reducing reliance on fully automated outputs.

- Ease of Use: Intuitive interfaces (e.g., Elai.io, Invideo AI) make it easy for beginners to create reliable videos, reducing errors that could compromise quality.

- Example: Synthesia users can convert text, PDFs, or PowerPoint slides into polished videos with over 230 avatars, fostering trust through consistent, user-driven results.
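
The four factors above can be treated as a simple pre-publication checklist. The following Python snippet is a minimal sketch of that idea, not part of any platform's API: the class `VideoTrustChecklist` and all of its field names are hypothetical, chosen only to mirror the factors in this list, and the answers would have to come from a person reviewing the video.

```python
from dataclasses import dataclass


@dataclass
class VideoTrustChecklist:
    """Hypothetical checklist; names are illustrative, not from any real tool."""

    discloses_ai_use: bool           # Transparency: AI use is labeled or watermarked
    free_of_visible_artifacts: bool  # Quality: no out-of-sync audio or unnatural motion
    passed_content_review: bool      # Ethics: cleared automated or human moderation
    reviewed_by_creator: bool        # User control: a person checked script, visuals, voiceover

    def unmet(self) -> list[str]:
        """Return the names of the factors that are not yet satisfied."""
        return [name for name, ok in vars(self).items() if not ok]

    def is_ready_to_publish(self) -> bool:
        """A video is considered ready only when every factor is satisfied."""
        return not self.unmet()


# Example: a draft that has not yet gone through content review.
draft = VideoTrustChecklist(
    discloses_ai_use=True,
    free_of_visible_artifacts=True,
    passed_content_review=False,
    reviewed_by_creator=True,
)
print(draft.unmet())                # ['passed_content_review']
print(draft.is_ready_to_publish())  # False
```

Treating every factor as required, rather than averaging them into a score, reflects the point made above that a single gap (for example, undisclosed AI use) is enough to undermine trust.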