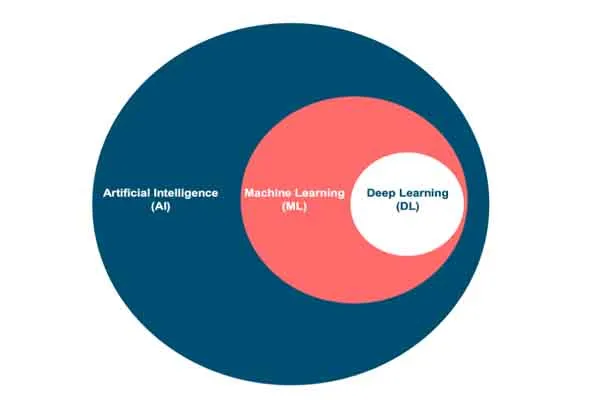

It seems there might be a typo in your request. I assume you meant "How Perplexity AI works without LLM" (Large Language Model). Based on this, I'll address how Perplexity AI might function if it didn’t rely on large language models, though it’s worth noting that Perplexity AI, as it exists today, fundamentally depends on LLMs for its operation. If it were to work without them, it would require a completely different approach. Here's an exploration of that concept:

Perplexity AI, as currently designed, leverages large language models like GPT-4 Omni, Claude 3, and its own fine-tuned models (e.g., Sonar) to process natural language queries, search the web in real time, and deliver concise, conversational answers with citations. Without LLMs, it couldn’t interpret complex queries or generate human-like responses as effectively.

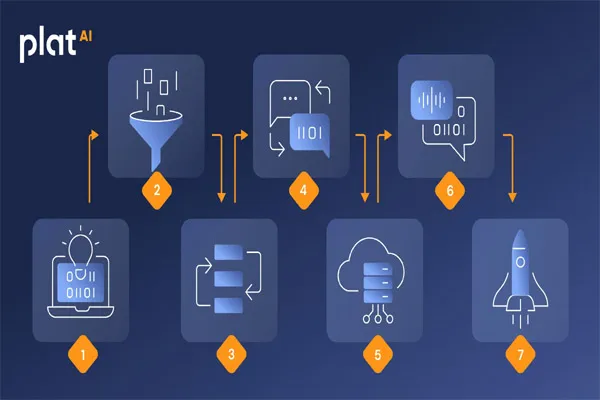

- Keyword-Based Search and Indexing: Without LLMs, Perplexity could revert to traditional search engine techniques, such as keyword matching and web indexing. It would crawl the web, build an index of terms, and rank results based on relevance to the user’s input. This would resemble older search engines like early Google or AltaVista, focusing on exact matches rather than understanding intent or context.

- Rule-Based Natural Language Processing: Instead of LLMs, it could use rule-based NLP systems with predefined grammars and dictionaries. These systems would parse queries into structured components (e.g., subject, verb, object) and map them to a database of prewritten responses or web sources. This approach would be rigid, struggling with ambiguity or conversational nuance, but it could still provide basic answers.

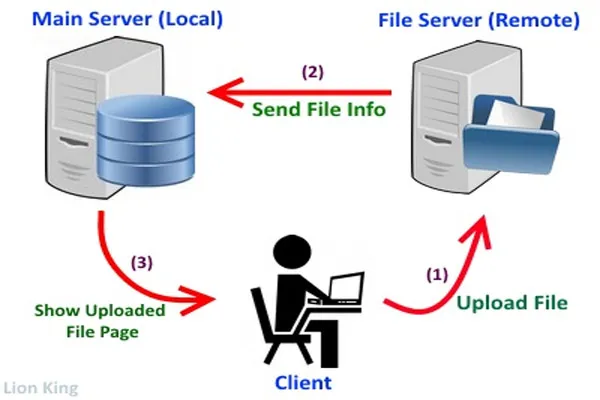

- Web Scraping and Summarization Algorithms: Perplexity could employ web scraping to gather real-time data and use statistical or heuristic algorithms to summarize content. For example, it might extract key sentences based on term frequency (e.g., TF-IDF) or predefined templates rather than generating summaries via LLMs. This would produce less coherent outputs but could still highlight relevant information.

- Database-Driven Responses: Without LLMs, Perplexity might rely on a massive, curated knowledge base—similar to Wolfram Alpha—where answers are precomputed or manually crafted for common queries. It could match user inputs to this database using pattern recognition, bypassing the need for dynamic text generation.

- Citation via Metadata: One of Perplexity’s strengths is citing sources. Without LLMs, it could still achieve this by linking results to metadata from its web index or database, associating answers with their origins based on algorithmic matching rather than language understanding.