When evaluating the performance of a Large Language Model (LLM), the quality of its underlying "database" (used here to mean the data it was trained on) plays a critical role. "Database strength" refers to the robustness, diversity, and reliability of the data, while "clarity" pertains to how well-organized, unambiguous, and accessible that data is for the model to leverage. Measuring these aspects helps ensure an LLM can generate accurate, coherent, and contextually relevant outputs. Below are key methods and considerations for assessing database strength and clarity in LLMs.

1. Assessing Database Strength

Strength reflects the depth, breadth, and quality of the data powering the LLM. A strong database enables the model to handle a wide range of queries effectively.

- Diversity of Sources

- Evaluate the variety of data origins (e.g., books, websites, scientific papers, social media). A diverse dataset reduces bias and improves generalization.

- Metric: Measure the proportion of unique domains or content types in the training corpus.

- Volume and Coverage

- Analyze the size of the dataset and its coverage across topics, languages, and domains. Larger, well-distributed datasets typically yield stronger models.

- Metric: Quantify total tokens or documents and assess topic distribution using topic modeling (e.g., LDA) or document clustering.

- Data Quality

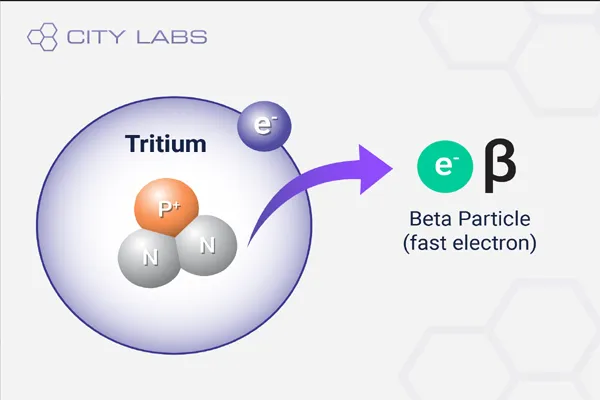

- Check for accuracy, relevance, and reliability of the data. High-quality data minimizes errors and hallucinations in LLM outputs.

- Method: Sample the dataset and manually or algorithmically score factual consistency (e.g., cross-check with verified sources).

- Temporal Relevance

- Ensure the database includes up-to-date information to maintain relevance. Outdated data weakens an LLM’s ability to address current events or trends.

- Metric: Calculate the percentage of data from recent years (e.g., post-2020) or track update frequency.

- Resilience to Noise

- Test how well a model trained on the database handles noisy, incomplete, or contradictory inputs. A strong database supports robust model performance despite imperfections.

- Method: Introduce synthetic noise (e.g., typos, missing context) and measure output degradation.
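Two of the metrics above (source diversity and temporal relevance) can be sketched in a few lines. This is a minimal illustration, not a standard implementation: it assumes each document carries a source URL and a publication year, and the function names `domain_diversity` and `recent_share` are hypothetical. Normalized Shannon entropy is added here as one possible way to capture how evenly documents are spread across domains; the text itself only calls for the proportion of unique domains.

```python
import math
from collections import Counter
from urllib.parse import urlparse


def domain_diversity(urls):
    """Return (unique-domain ratio, normalized Shannon entropy) for source URLs.

    Assumes every document has a source URL (an illustrative assumption).
    """
    if not urls:
        raise ValueError("urls must not be empty")
    domains = [urlparse(u).netloc.lower() for u in urls]
    counts = Counter(domains)
    total = len(domains)
    # Share of distinct domains among all documents.
    unique_ratio = len(counts) / total
    # Shannon entropy of the domain distribution, in bits.
    entropy = -sum((c / total) * math.log2(c / total) for c in counts.values())
    # Normalize by the maximum possible entropy so the score lies in [0, 1].
    max_entropy = math.log2(len(counts)) if len(counts) > 1 else 1.0
    return unique_ratio, entropy / max_entropy


def recent_share(years, cutoff=2020):
    """Fraction of documents dated strictly after the cutoff year."""
    if not years:
        raise ValueError("years must not be empty")
    return sum(1 for y in years if y > cutoff) / len(years)


urls = [
    "https://a.org/x",
    "https://a.org/y",
    "https://b.com/z",
    "https://c.net/q",
]
ratio, evenness = domain_diversity(urls)
print(f"unique-domain ratio: {ratio:.2f}, evenness: {evenness:.2f}")
print(f"share after 2020: {recent_share([2018, 2021, 2022, 2019]):.2f}")
```

A low unique-domain ratio or low evenness suggests the corpus leans heavily on a few sources; a low recent share suggests the data may be stale for current-events queries.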

2. Evaluating Database Clarity

Clarity ensures the data is structured and presented in a way that the LLM can interpret it effectively, reducing confusion and improving response precision.

- Consistency of Formatting

- Assess whether the data follows uniform conventions (e.g., grammar, syntax, metadata). Inconsistent formatting can confuse the model during training.

- Metric: Analyze variance in sentence structure, punctuation, or tagging across the dataset.

- Ambiguity Reduction

- Identify and quantify ambiguous terms, phrases, or contexts in the data. Clear data minimizes misinterpretation by the LLM.

- Method: Use natural language processing (NLP) tools to flag polysemy or vague references and calculate their frequency.

- Contextual Coherence

- Ensure the database provides sufficient context for the model to understand relationships between concepts. Lack of context leads to unclear or disjointed outputs.

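- Metric: Measure the average amount of surrounding context (e.g., neighboring sentences or paragraphs) available per data point. This is a property of the data and is distinct from the model's own context window.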

- Labeling and Annotation Quality (if applicable)

- For supervised learning components, evaluate the accuracy and consistency of labels or annotations. Poor labeling muddies the model’s understanding.

- Method: Audit a subset of labeled data for inter-annotator agreement (e.g., Cohen’s Kappa for two annotators, or Fleiss’ Kappa for more than two).

- Noise-to-Signal Ratio

- Assess the proportion of irrelevant or redundant data (noise) versus useful information (signal). High clarity means low noise.

- Metric: Use entropy measures or manual sampling to estimate noise levels.
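The inter-annotator agreement check mentioned above can be illustrated with a small, dependency-free computation of Cohen's Kappa for two annotators. This is a sketch under the assumption that both annotators labeled the same items in the same order; the function name `cohens_kappa` and the sample labels are illustrative only.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's Kappa for two annotators labeling the same items in order."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(labels_a)
    # Observed agreement: fraction of items with identical labels.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Expected chance agreement from each annotator's label frequencies.
    freq_a = Counter(labels_a)
    freq_b = Counter(labels_b)
    expected = sum(freq_a[k] * freq_b[k] for k in freq_a) / (n * n)
    if expected == 1.0:
        # Both annotators used one identical label for every item.
        return 1.0
    return (observed - expected) / (1 - expected)


annotator_1 = ["pos", "pos", "neg", "neg"]
annotator_2 = ["pos", "neg", "neg", "neg"]
print(cohens_kappa(annotator_1, annotator_2))  # 0.5
```

Values near 1 indicate strong agreement and values near 0 indicate agreement no better than chance, which would point to unclear labeling guidelines or ambiguous data.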

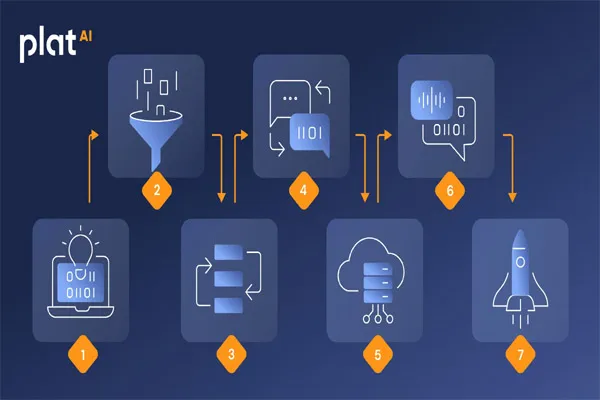

3. Practical Testing Methods

To measure these qualities in practice, you can combine quantitative metrics with qualitative assessments:

- Benchmarking Outputs

- Test the LLM with standardized question sets (e.g., TriviaQA, MMLU) to gauge accuracy and coherence, reflecting database strength and clarity indirectly.

- Example: Compare response quality across diverse domains to infer data coverage.

- Adversarial Testing

- Probe the model with edge cases, ambiguous queries, or misinformation to expose weaknesses tied to the database.

- Example: Ask, “What’s the smell of rain like?” to see how the model handles an open-ended, subjective question that has no single factual answer.

- Ablation Studies

- Remove portions of the database (e.g., specific sources or time periods) and measure performance drops. This highlights the contribution of each data segment.

- Example: Exclude technical papers and test STEM-related query accuracy.

- User Feedback Analysis

- Collect real-world usage data to identify patterns of confusion or error, pointing to database gaps or ambiguities.

- Example: Track queries where the LLM asks for clarification as a signal of unclear data.
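As a rough illustration of comparing response quality across domains, the sketch below aggregates graded benchmark results by domain and flags the weakest ones. It assumes the answers have already been graded as correct or incorrect; the function names, the `(domain, is_correct)` record shape, and the 0.5 threshold are all hypothetical choices, not part of any benchmark's tooling.

```python
from collections import defaultdict


def accuracy_by_domain(results):
    """Compute accuracy per domain from (domain, is_correct) pairs."""
    totals = defaultdict(int)
    correct = defaultdict(int)
    for domain, is_correct in results:
        totals[domain] += 1
        correct[domain] += int(is_correct)
    return {d: correct[d] / totals[d] for d in totals}


def weakest_domains(results, threshold=0.5):
    """Return domains whose accuracy falls strictly below the threshold."""
    scores = accuracy_by_domain(results)
    return sorted(d for d, acc in scores.items() if acc < threshold)


graded = [
    ("law", True),
    ("law", False),
    ("math", True),
    ("math", True),
    ("medicine", False),
]
print(accuracy_by_domain(graded))
print(weakest_domains(graded))
```

The same aggregation can be reused for ablation studies: run it once on the full model and once on the model trained without a data segment, then compare the per-domain scores.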

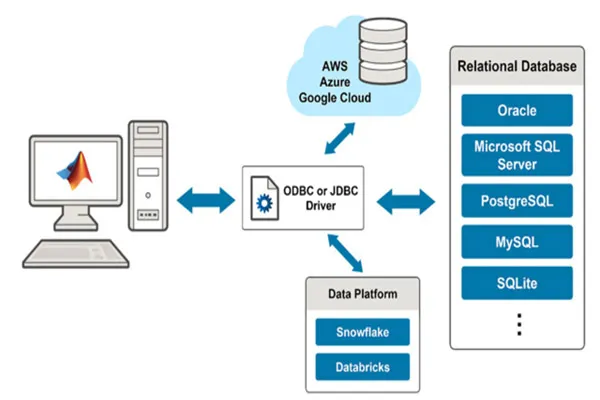

4. Tools and Techniques

Leverage these tools to quantify and improve database strength and clarity:

- NLP Frameworks: Use spaCy, NLTK, or Hugging Face’s Transformers to analyze text quality and structure.

- Data Visualization: Employ tools like Tableau or Matplotlib to map data distribution and identify gaps.

- Statistical Analysis: Apply metrics like perplexity (how well a language model predicts a text, usable as a rough proxy for fluency) or BLEU scores (n-gram overlap between model output and reference texts).

- Web Scraping/Search: Cross-reference database content with current web data to ensure relevance (if permitted).
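To make the perplexity metric concrete, here is a minimal sketch that computes it from per-token log-probabilities. It assumes you already have natural-log token probabilities from some model; how you obtain them depends on the model or API and is not shown. The function name `perplexity` is illustrative.

```python
import math


def perplexity(token_logprobs):
    """Perplexity as exp of the negative mean natural-log token probability."""
    if not token_logprobs:
        raise ValueError("token_logprobs must not be empty")
    mean_logprob = sum(token_logprobs) / len(token_logprobs)
    return math.exp(-mean_logprob)


# A model that assigns probability 0.25 to every token has perplexity 4:
# it is as uncertain as a uniform choice among four options.
logprobs = [math.log(0.25)] * 4
print(round(perplexity(logprobs), 6))  # 4.0
```

Lower perplexity on a held-out sample means the model finds that text more predictable; unusually high perplexity on a data slice can flag noisy or out-of-distribution content worth inspecting.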

5. Improving Database Strength and Clarity

Once measured, enhance the database with these strategies:

- Data Augmentation: Add diverse, high-quality sources to boost strength.

- Cleaning Pipelines: Standardize formatting and remove noise for better clarity.

- Continuous Updates: Integrate fresh data to maintain temporal relevance.

- Expert Validation: Use domain experts to refine ambiguous or technical content.
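A cleaning pipeline of the kind described above might start as small as the following sketch. It covers only three illustrative steps (Unicode normalization, whitespace collapsing, and case-insensitive exact-duplicate removal); real pipelines usually add near-duplicate detection and quality filtering. The function names `normalize` and `clean_corpus` are hypothetical.

```python
import re
import unicodedata


def normalize(text):
    """Apply Unicode NFKC normalization and collapse runs of whitespace."""
    text = unicodedata.normalize("NFKC", text)
    return re.sub(r"\s+", " ", text).strip()


def clean_corpus(docs):
    """Normalize documents, then drop empty ones and exact duplicates.

    Duplicates are detected case-insensitively; the first occurrence is kept.
    """
    seen = set()
    cleaned = []
    for doc in docs:
        norm = normalize(doc)
        key = norm.casefold()
        if norm and key not in seen:
            seen.add(key)
            cleaned.append(norm)
    return cleaned


raw = ["Hello   world ", "hello world", "", "New\ttext"]
print(clean_corpus(raw))  # ['Hello world', 'New text']
```

Keeping the first occurrence preserves the original casing of a document while still removing later copies that differ only in case or spacing.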